In this article, I shall demonstrate including an automated Tableau workbook refresh as part of a data transformation refresh process |

Whilst Tableau Server does have a built-in scheduler for users to schedule refreshes of data whether they be workbook extracts, or published extracts, sadly, the scheduler is quite limited in function.

As long as the server is able to reach the data source, then the server and workbook are able to report that the refresh attempt was successful. Attempt - this is the key word, so even if no data had been transferred, or even if the table had been truncated before hand, Tableau Server is still correct in marking the refresh as successful.

Reports and dashboards require data in order to function, but also require the latest data in order to be useful. A dashboard that does not contain the latest available data is about as useful as a waterproof teabag. So a scheduler that is not capable of profiling the data source ahead of a refresh, is also not very useful as it could end-up just refreshing the same data yet reporting that the latest data has been loaded, or worse, in the event that the source table has been truncated, Tableau will actually import No data.

Import no data is not the same as no import or importing nothing, if the data source is empty as it has been truncated or rebuilt following corruption, then this is what Tableau shall import: scrubbing the existing data in the extract. |

One potential method of avoiding such a problem would be to use an incremental refresh, though this requires a field to be targeted such as a date or row number; but what happens if you have a less-than-straightforward setup? Maybe this is a rolling year-and-a-day dashboard: as data is reprocessed, you can perform a daily refresh just to bring through the latest data, but, on the first of each month, the whole data-set needs to be dropped and the entire last 12 months needs to be brought in.

Tools like Alteryx and Tableau Prep can perform similar tasks like this, though they too are still limited to only bringing through data when it is ready: there is no failsafe if the data simply isn't ready yet as its still in transit; the only alternative in this situation is "wait until tomorrow" - not great for mission-critical data.

This is exactly the problem encountered by Madhu Latha Doddala in their real-world Tableau Forum post: schedule a incremental refresh daily and full refresh once a month to the same data extract (tableau.com) perfect timing for this article, so I used this article to help him tackle his more complex requirements; with a couple of added extras of my own:

So including the refresh as part of the etl build is the safest method to ensuring that the data is refreshed just-in-time. What's more is, that by building the refresh process directly into the etl mechanism, we can go beyond the basics of the Tableau Server refresh and even the notifications.

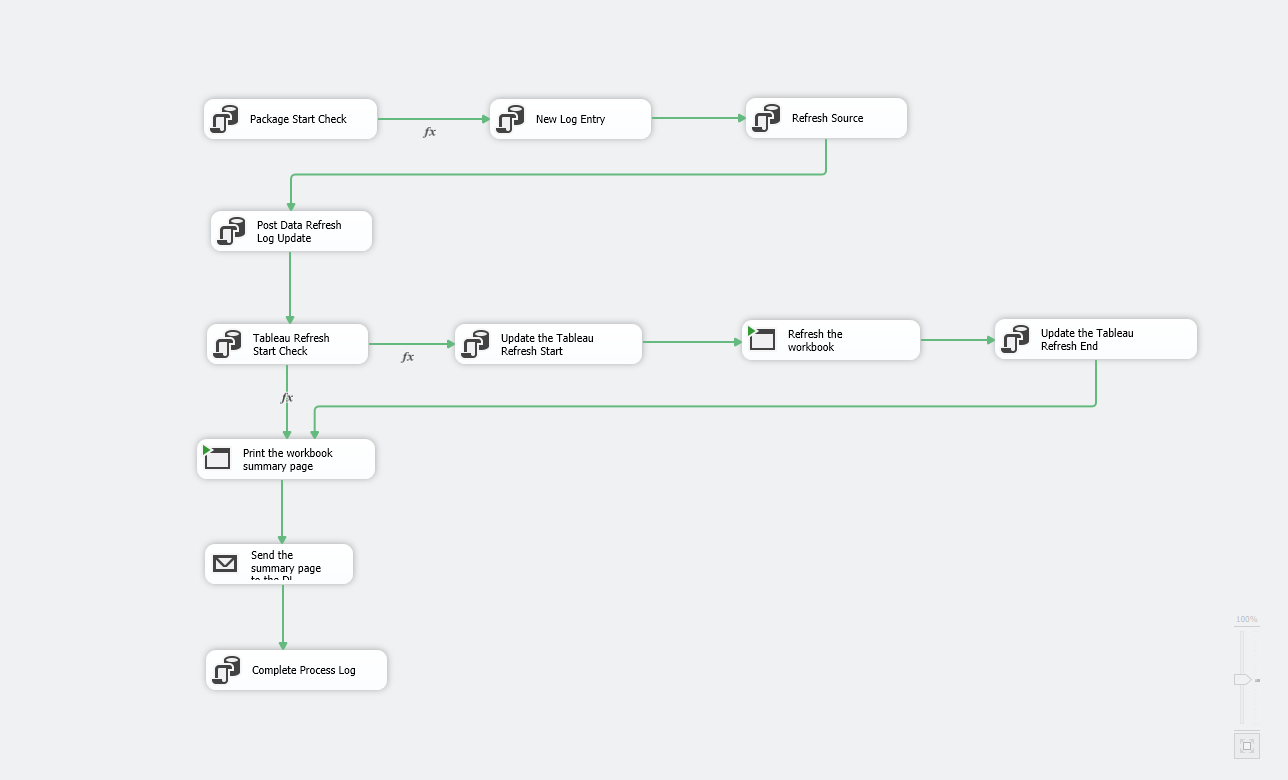

This full build will demonstrate:

All in a complete package that can be easily deployed and later modified.

This complete guide has been broken-down to the following sections:

We will be creating several types of code as we go, though the pieces we want to focus on here are:

It all begins with a log. The log controls everything: it determines whether the process can initiate, and in some bigger etl's, I use a log for stage processing; this way, if a stage fails to complete, or needs to be reprocessed, I can simply kick-off the whole etl process knowing only the failed stages will be processed. Furthermore, as emails are also included in the process, the tech team are only notified if part or all of a process has failed, and using this log, I can include the tables that failed.

So as a minimum, our log will need:

Well, it is a log after all. Sure, there are a number of fields that may not seem too relevant for controlling an etl process, and you'd be correct; only the fields coloured orange are all that are required, but for the sake of a few extra lines of code, we can plug the log into another dashboard (tool of choice but if Tableau is in your arsenal, it may as well be this), and this way, you can keep a proactive analysis of the etl performance: sudden spikes in record counts or processing times etc allow you the time to look for the cause before they become a real problem.

The necessary fields - the orange ones:

Finally, unless you have a multi-refresh set-up in which case the date_time fields will become so much more important, it is the case that there should be just one completed entry per refresh, as a new record is only created and populated when the etl begins.

Create Table dbo.Company_KPI_Log ( KPI_Log_ID Int Identity(1,1) Not Null Constraint pk_KPI_Log Primary Key Clustered(KPI_Log_ID) ,Process_Start_Date Date Null ,Process_Start_DateTime DateTime Null ,Process_End_Date Date Null ,Process_End_DateTime DateTime Null ,Process_Complete_Flag TinyInt Null ,Processing_Time Time Null ,Data_Process_Start_DateTime DateTime Null ,Data_Process_End_DateTime DateTime Null ,Record_Count Int Null ,RecordSet_Max_Date Date Null ,Tableau_Refresh_Start_DateTime DateTime Null ,Tableau_Refresh_End_DateTime DateTime Null ); |

All the fields need to be defined as null, as they shall be updated as each process stage completes. And, although I have written this for SQL Server, I have kept it as close to SQL ANSI-11 as possible, the field "Process_Complete_Flag" should be a boolean field (bit in SQL Server with a data size of 1 byte), I have defined this to a TinyInt which in SQL Server can hold a value of between 0 to 255 but is also 1 byte in size, so has the same data size as bit which can only hold 0 or 1 (False or True) |

Lets create the table now. I have created this as a sproc, this is so I can include it as a failsafe in the main etl code: If the table is not present, then recreate it so as to prevent the main etl bugging-out when it comes to load the data to a table that does not exist. Also, you will notice that this script creates two identical tables - the actual table, and a staging table. This is so we can load the data to the staging table first, check to ensure the data is present before then loading into the main table. This process allows for a failsafe, to retain the existing data in the event that no newer data is available at the time of the refresh.

We won't create this straight away, we'll let the etl script handle this later; this way, we know the failsafe is working:

Create Procedure sp_Company_KPI_Data_Table As

Create Table dbo.Company_KPI_Data_Load (

Company_KPI_DL_ID Int Identity(1,1) Not Null

Constraint pk_Company_KPI_DL Primary Key Clustered(Company_KPI_DL_ID)

,Order_ID Varchar(20) Null

,Order_Date Date Null

,Ship_Date Date Null

,Ship_Mode Varchar(20) Null

,Customer_ID Varchar(10) Null

,Customer_Name Varchar(30) Null

,Segment Varchar(20) Null

,Country_Region Varchar(20) Null

,City Varchar(20) Null

,[State] Varchar(20) Null

,Postal_Code Int Null

,Region Varchar(10) Null

,Product_ID Varchar(20) Null

,Category Varchar(20) Null

,Sub_Category Varchar(20) Null

,Product_Name Varchar(130) Null

,Sales Real Null

,Quantity Smallint Null

,Discount Real Null

,Profit Real Null

);

-- Checks for the presence of the staging table, drops and recreates

If Object_ID('Company_KPI_Data_Load_stg') Is Not Null Drop Table Company_KPI_Data_Load_stg;

Create Table dbo.Company_KPI_Data_Load_stg (

Company_KPI_DL_ID Int Identity(1,1) Not Null

Constraint pk_Company_KPI_DL Primary Key Clustered(Company_KPI_DL_ID)

,Order_ID Varchar(20) Null

,Order_Date Date Null

,Ship_Date Date Null

,Ship_Mode Varchar(20) Null

,Customer_ID Varchar(10) Null

,Customer_Name Varchar(30) Null

,Segment Varchar(20) Null

,Country_Region Varchar(20) Null

,City Varchar(20) Null

,[State] Varchar(20) Null

,Postal_Code Int Null

,Region Varchar(10) Null

,Product_ID Varchar(20) Null

,Category Varchar(20) Null

,Sub_Category Varchar(20) Null

,Product_Name Varchar(130) Null

,Sales Real Null

,Quantity Smallint Null

,Discount Real Null

,Profit Real Null

); |

So now we have the log table, lets now get on with the code.

This code doesn't do anything special, simply selects some data from one table and moves it to another, the only difference is how much data is being moved: On the 1st of the month, the table is truncated and the last 365 days are transferred, whereas any other day, we are just transferring the number of days that have lapsed and become available since the last run, which we would normally expect to be one day.

Create Procedure sp_Company_KPI_Build As

-- Part 1: Identify the last run date:

Declare @Run_Date Date

-- In line with the requirement, when the date is the 1st of the month, run_date needs to be set to 365 days ago

Select @Run_Date = RecordSet_Max_Date From Company_KPI_Log Where KPI_Log_ID = (Select Max(KPI_Log_ID) From Company_KPI_Log)

If Datepart(day,GetDate()) = 1 Set @Run_Date = DateAdd(day,-365,@Run_Date)

-- Part 2: Prepare the table:

-- Step 1: If the table does not exist, create it

If Object_ID('Company_KPI_Data_Load') Is Null Exec sp_Company_KPI_Data_Table;

-- Step 2: Truncate the staging table

Truncate Table Company_KPI_Data_Load_stg

-- Step 3: Load the data to staging

Insert Into Company_KPI_Data_Load_stg

Select

Order_ID

,Order_Date

,Ship_Date

,Ship_Mode

,Customer_ID

,Customer_Name

,Segment

,Country_Region

,City

,[State]

,Postal_Code

,Region

,Product_ID

,Category

,Sub_Category

,Product_Name

,Sales

,Quantity

,Discount

,Profit

From dbo.Orders

Where Order_Date > @Run_Date;

-- Part 3:

-- Step 1: Now check the data in the staging table. This is a simple row-count to ensure that data has been loaded. Exit script if the check fails

If (Select Count(1) From Company_KPI_Data_Load_stg) < 1 Return

-- Step 2: Now we'll truncate the data if its the first of the month:

If Datepart(day,GetDate()) = 1 Truncate Table Company_KPI_Data_Load

-- Step 3: Now we can load the data to the production table

Insert Into Company_KPI_Data_Load

Select

Order_ID

,Order_Date

,Ship_Date

,Ship_Mode

,Customer_ID

,Customer_Name

,Segment

,Country_Region

,City

,[State]

,Postal_Code

,Region

,Product_ID

,Category

,Sub_Category

,Product_Name

,Sales

,Quantity

,Discount

,Profit

From Company_KPI_Data_Load_stg |

So now we have the code to run the etl process, and the log file that controls it, we now have two final scripts to write: the Tableau ones.

The first, the refresh script is simply a login to Tableau Server, and initiate the workbook refresh, which will refresh all the extracts contained therein:

(I'm assuming that you have installed tabcmd, and moved it to the root directory of the pc/server that shall be executing the etl, and that you have created a scripts folder for your tabcmd scripts as detailed here: Tableau Server Automation - On a Tableau Quest... - Confluence (atlassian.net))

cd c:\tabcmd\tabcmd tabcmd login -s <your server> -u <your username> -p <your password> tabcmd refreshextracts --workbook "Comapny_KPI" tabcmd logoff |

Always remember the logoff to close the session when you have completed your commands

Save this to the scripts directory (C:\tabcmd\scripts) as company_kpi_refresh.bat

Now the print-out, I would recommend another directory within tabcmd called "prints", and whilst its possible to print the entire workbook to pdf - useful for leaders who need a complete overview before they reach the office network, and a high-level summary just won't do, in this instance, I shall only export the Summary dashboard page, and I shall assume you are using only a single site, ,otherwise you would need the -t switch immediately before the workbook location

cd c:\tabcmd\tabcmd tabcmd login -s <your server> -u <your username> -p <your password> tabcmd export "Company_KPI/Summary" --png -f "C:\tabcmd\prints\company_kpi.png" tabcmd logoff |

Save this as company_kpi_daily_print.bat

Now we have finished the major scripting we can get on with the rest of the automation.

Open SQL Server Integration Services in MS Visual Studio, create a new project, give it a name and press OK to begin the build, this is covered here: Programmatic Workbook Refresh (10 Minute Read) - On a Tableau Quest... - Confluence (atlassian.net) in Step 2

SQLStatement press the ellipses to expand the window and use this code:

Select @RefreshState = Case When Process_End_Date < DateAdd(day,1,GetDate()) Then 1 Else 0 End From Company_KPI_Log Where KPI_Log_ID = (Select Max(KPI_Log_ID) From Company_KPI_Log) |

SQLStatement to

Insert Into Company_KPI_Log Select Cast(GetDate() As Date) As Process_Start_Date ,GetDate() As Process_Start_DateTime ,Null ,Null ,Null ,Null ,GetDate() As Data_Process_Start_DateTime ,Null ,Null ,Null ,Null ,Null |

Expression - you can type in your variable here, or search for it and build the expression (preferred) by clicking the ellipses, the output should resemble

@[User::RefreshState] == 1 |

What this does is only progresses the package to the data refresh if the log check determined that the refresh hadn't taken place today: returns 1

Press OK

SQLStatement:

Update Company_KPI_Log Set Data_Process_End_DateTime = GetDate() ,Record_Count = (Select Count(1) From Company_KPI_Data_Load) ,RecordSet_Max_Date (Select Max(Order_Date) From Company_KPI_Data_Load) Where KPI_Log_ID = (Select Max(KPI_Log_ID) From Company_KPI_Log) |

SQLStatement:

Select @RefreshState = Case Coalesce(Record_Count,0) > 0 Then 1 Else 0 End From Company_KPI_Log Where KPI_Log_ID = (Select Max(KPI_Log_ID) From Company_KPI_Log) |

SQLStatement:

Update Company_KPI_Log Set Tableau_Refresh_Start_DateTime = GetDate() Where KPI_Log_ID = (Select Max(KPI_Log_ID) From Company_KPI_Log) |

Double-click the connection and repeat Step 8 here: Set the Evaluation Operation to Expression and set the Expression to:

@[User::RefreshState] ==1 |

SQLStatement:

Update Company_KPI_Log Set Tableau_Refresh_End_DateTime = GetDate() Where KPI_Log_ID = (Select Max(KPI_Log_ID) From Company_KPI_Log) |

Expression:

@[User::Variable]==0 |

Therefore, if there was no data transferred during step 9, this shall be detected during step 12 above, redirecting the task straight to the generate the workbook image avoiding refreshing the workbook.

SQLStatement:

Update Company_KPI_Log Set Process_End_Date = Cast(GetDate() As Date) ,Process_End_DateTime = GetDate() ,Process_Complete_Flag = 1 ,Processing_Time = GetDate() - Process_Start_DateTime Where KPI_Log_ID = (Select Max(KPI_Log_ID) From Company_KPI_Log) |

This completes the build side, the final step is to deploy the package to SQL Server, and configure the Agent scheduler which is covered from Step 3 in the shorter guide here: Programmatic Workbook Refresh (10 Minute Read) - On a Tableau Quest... - Confluence (atlassian.net)