Tableau Best Practices

It's a sad fact of life, but Tableau probably won't be around forever, certainly not as your company seeks out newer tools and its data continues to grow exponentially.

The problem is that your company knows this, and it has built, and continues to build, systems in order to progress.

It is very rare, and in most cases improbable, that your Tableau reports and analyses will consume data from a dedicated data warehouse optimised for Tableau. At best, you can hope that a warehouse for visualisation is made available, although most of the time you will be consuming the same data as everyone else. Unless you are a member of the BI team, or have DDL (Data Definition Language) access to the databases (required to create and optimise tables, views, ETL etc.), the chances are that you will not be able to optimise the back end.

Best practice for performance is key to everything we do as Tableau developers. If our reports slow down, or create bottlenecks further down the chain, you can be guaranteed of the following (in this order):

- Users will tire of waiting, ultimately leading them to find alternatives for their answers

- Our reports may find themselves permanently disabled

- Users will ask who needs Tableau when they can write their own SQL and present it in Excel. Sure, Tableau is prettier and makes things easier, but reports that slow everything down cannot be used

I experienced this myself several years ago: I was hired to fix a set of reports that took more than 5 minutes to do anything, and to improve their performance. Things had become so bad that the team had ditched the dedicated Tableau reports and taken to Excel.

It took 2 months of investigation and rebuilding from scratch to fix the underlying performance problems and achieve rendering times of under 10 seconds on a billion-row, 200-column table of mixed nvarchar / bigint columns. It paid off too: the report is still in use now (4 years later), which is great given that it is the main R&D platform.

I remember my Family Law lecturer, when I was studying for my degree, making the point that the subject should really be called anti-family law, as we spend more time trying to find the best way to amicably break a family apart than we do creating one or keeping it together.

Using Tableau is no different. To ensure that we as developers are getting the best from Tableau, we need to keep asking "is this method the best way to achieve this?", "is Tableau the best tool for the job?", "do we actually need this?" and finally "I'm sure we can demonstrate this more simply than that".

Data drives everything.

That includes your reports. But data is not always optimised for the job in hand, and often cannot be, so you should see data and data performance as the main drivers behind your reports. Don't try to over-wrangle Tableau.

So this is where the following guide steps in; a little more information can be found on the following pages:

Finally, this is my list of items to consider for best practice for all of your analyses and dashboards:

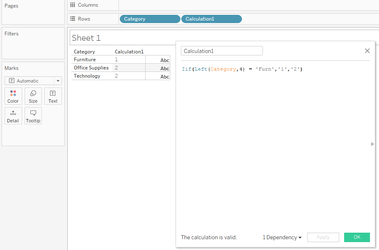

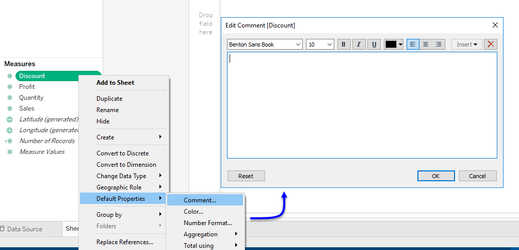

(1.) Only use calculations when you need them:

But calculations are Tableau's bread and butter, aren't they? What can I possibly do if I cannot write calculations?

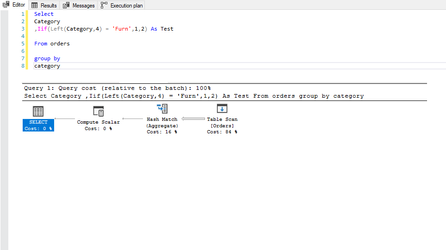

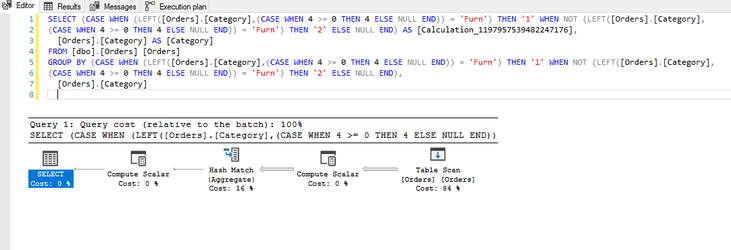

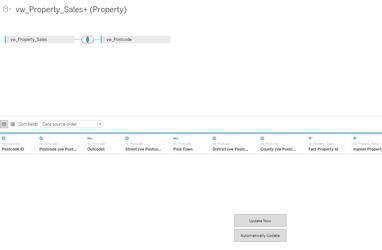

(2.) Wherever possible ALWAYS use CustomSQL

Hold on a moment: the majority of the articles available online, and other Tableau users, generally advise avoiding CustomSQL and using the Tableau data model instead, but you are telling me the exact reverse. Why?
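To make the question concrete, here is a minimal sketch of the kind of statement that might sit behind a CustomSQL connection. It uses Python's built-in `sqlite3` module purely as a stand-in for a real warehouse. The `sales` table, its columns and the date filter are all hypothetical, and the benefit it illustrates (restricting columns and rows before anything reaches Tableau) is an assumption about the reasoning rather than something stated here.

```python
import sqlite3

# Hypothetical stand-in for a wide warehouse table; all names are illustrative.
conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE sales ("
    "order_id INTEGER, region TEXT, order_date TEXT, amount INTEGER, notes TEXT)"
)
conn.executemany(
    "INSERT INTO sales VALUES (?, ?, ?, ?, ?)",
    [
        (1, "EMEA", "2023-01-05", 100, "x"),
        (2, "EMEA", "2024-02-10", 250, "y"),
        (3, "APAC", "2024-03-15", 400, "z"),
    ],
)

# The kind of statement you might paste into a CustomSQL connection:
# name only the columns the report needs and filter rows at the source.
CUSTOM_SQL = """
    SELECT region, order_date, amount
    FROM sales
    WHERE order_date >= '2024-01-01'
"""

cursor = conn.execute(CUSTOM_SQL)
columns = [d[0] for d in cursor.description]  # column names in the result
rows = cursor.fetchall()                      # only the filtered rows
```

In this toy case the unused `order_id` and `notes` columns and the 2023 row never leave the database, so the report has less data to pull and render.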

(3.) Avoid nesting and materialise wherever possible

OK, I think I understand this one: instead of writing nested queries, you suggest I should create separate calculations for what would have been the nested calculations? Why? Surely this will lead to more processing.

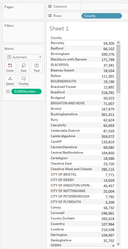

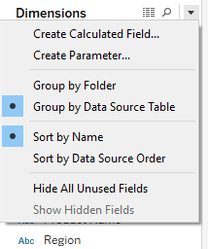

(4.) Use Tableau's built-in features (aliases, groups, sets, bins etc.) instead of calculations wherever possible, or make the changes in CustomSQL

But calculations are easier, and aliases / groups / bins etc. may be single-use.

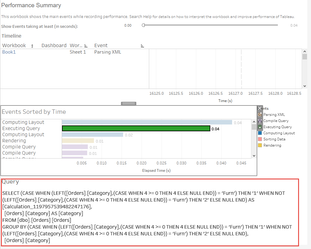

(5.) Always test performance using the Performance Recorder

About the Performance Recorder

The recorder simply records all Tableau events to help you identify performance issues.

The recorder is NOT a screen-capture device: it does not record screen activity, webcam or microphone, and all recorded activity is saved locally.

(6.) Understand your use-case carefully and consider the best methods for connecting to your data

(7.) Additive Calculations belong in the source
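As a minimal sketch of what this could look like, the example below again uses Python's built-in `sqlite3` module as a stand-in for the real source. It reads "additive" as row-level arithmetic whose results can simply be summed, which is an assumption. The `order_lines` table, the `order_lines_v` view and the `line_total` column are hypothetical names.

```python
import sqlite3

# Hypothetical source table; all names are illustrative.
conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE order_lines (region TEXT, quantity INTEGER, unit_price INTEGER)"
)
conn.executemany(
    "INSERT INTO order_lines VALUES (?, ?, ?)",
    [("EMEA", 2, 10), ("EMEA", 1, 30), ("APAC", 5, 4)],
)

# Define the row-level calculation once, in the source, instead of as a
# calculated field in every workbook that needs it.
conn.execute(
    "CREATE VIEW order_lines_v AS "
    "SELECT region, quantity, unit_price, "
    "quantity * unit_price AS line_total "
    "FROM order_lines"
)

# The reporting tool then only has to SUM an existing column.
totals = dict(
    conn.execute(
        "SELECT region, SUM(line_total) FROM order_lines_v GROUP BY region"
    )
)

# Because the measure is additive, regional totals roll up to the grand total.
grand_total = conn.execute(
    "SELECT SUM(line_total) FROM order_lines_v"
).fetchone()[0]
```

Here `totals` holds one pre-computed sum per region and `sum(totals.values())` equals `grand_total`, so the same column can be aggregated at any level without recalculating it in the workbook.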

(8.) Understand Tableau's role

(9.) Limit your use of filters

Are you kidding? If I don't have filters, my charts and dashboards will become unusable! It was only a matter of time before these ended up on your list, killjoy!